Security teams adopt AI for speed, but unclear governance and weak human oversight increase risk as automation outpaces accountability and trust.

Anmol Agarwal, Senior Security Researcher at Nokia, outlined a value-first, human-led approach grounded in real-world security operations and her public sector experience.

Leaders build resilience by using AI to scale detection while keeping humans in control through clear guardrails, trusted advisors, and contingency planning.

As AI becomes embedded across security operations, many organizations are learning that speed alone is not a strategy. Machine-driven detection is now a baseline requirement for keeping up with modern threats, but the real challenge lies in how those systems are deployed, governed, and trusted. Too often, the rush to automate outpaces the discipline required to manage risk, define accountability, and keep humans firmly in control.

That tension between capability and control sits at the center of today’s security conversations, and it also runs through Anmol Agarwal's work. A Senior Security Researcher at Nokia and Adjunct Professor at George Washington University, she draws on experience across both industry and government, including time at the Cybersecurity and Infrastructure Security Agency. She also explores many of these same themes in her AI Security Update podcast, where discussions often focus on how organizations can evaluate AI realistically and maintain human oversight in high-stakes decisions.

"AI can recognize attack patterns and types of malware that would have taken far longer to identify through manual analysis," said Agarwal. From there, she strips AI down to its practical role in security. Agarwal framed it as an evolution of data analytics that helps teams detect and understand threats faster and at greater scale, while emphasizing that the real differentiator is not the technology itself, but how it is intentionally applied to reduce risk and improve outcomes.

Show me the ROI: Agarwal urged security leaders to take a value-first approach to AI adoption, starting with a clear definition of the problem and the data required to solve it. AI only earns its place when it meaningfully improves outcomes. "Figure out what problem you’re trying to solve and what data you need to solve that problem," she said. If a simple, manual approach can deliver the same result, she cautioned, adding AI may introduce unnecessary complexity rather than real value.

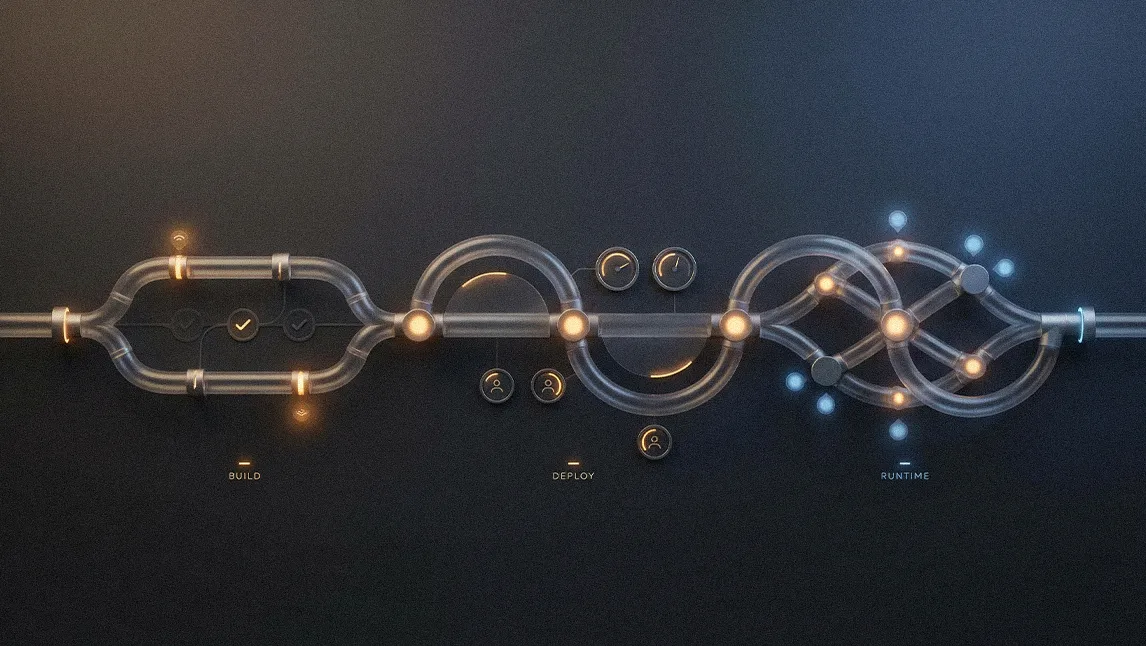

The human firewall: That discipline is reshaping the conversation. Rather than chasing full autonomy, security teams are moving toward hybrid, human-led SOC models where AI augments expert judgment. Agarwal said that AI must be designed with continuous human oversight across training, deployment, and day-to-day use. "AI is a really good tool, but it can hallucinate or make mistakes sometimes."

AI as a starting point: Agarwal framed AI as a way to manage scale, not replace judgment. It can help security teams cut through thousands or even millions of records and surface initial insights, but those outputs still require validation. "Use AI to help with the initial analysis, but then also have humans there to check and see if it’s working the way it should," she advised.

Building this human-centric model hinges on two key investments: the right people and the right guardrails. Agarwal's most insistent advice for leaders feeling overwhelmed is to invest in people first. Within this framework, trust isn’t assumed; it’s engineered through hard technical controls that prevent agentic systems from taking unintended actions, paired with a human who is ready to verify their outputs.
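As a rough illustration of what such a guardrail could look like in practice, the sketch below gates every action an AI agent proposes behind an allowlist and an explicit human approval. It is a minimal example under stated assumptions, not a description of any system Agarwal or Nokia uses: the action names, the `Proposal` type, and the `execute` function are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical allowlist: the only actions the agent may ever trigger.
ALLOWED_ACTIONS = {"quarantine_file", "open_ticket"}


@dataclass
class Proposal:
    """An action suggested by an AI system (illustrative structure)."""
    action: str
    target: str


def execute(proposal: Proposal, approved_by: Optional[str] = None) -> str:
    """Run a proposed action only if it is allowlisted AND a human approved it."""
    # Hard technical control: anything outside the allowlist is refused outright.
    if proposal.action not in ALLOWED_ACTIONS:
        return "blocked"
    # Human oversight: without a named approver, the action waits for review.
    if approved_by is None:
        return "pending_review"
    # A real system would call the response tooling here; this sketch only reports.
    return f"executed:{proposal.action}:{proposal.target}:by:{approved_by}"


if __name__ == "__main__":
    print(execute(Proposal("delete_database", "prod")))
    print(execute(Proposal("quarantine_file", "invoice.exe")))
    print(execute(Proposal("quarantine_file", "invoice.exe"), approved_by="analyst1"))
```

The design choice worth noting is that the default outcome is inaction: an unlisted action is blocked and an unapproved one simply waits, so a model error or glitch cannot trigger a response on its own.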

Invest in your advisors: As security systems become more autonomous, Agarwal said effective leadership depends on trusted advisors and sustained human oversight. Leaders do not need deep technical mastery, but they do need experts who can interpret AI behavior and step in when systems misfire. "Leaders thrive with the right support team around them. You will always need someone there to verify that a system is behaving correctly and not reacting to a glitch." AI’s fallibility is precisely why human judgment remains essential: the technology transforms security roles rather than replacing them.

Ultimately, as attackers increasingly weaponize AI, automation is no longer optional. In an emerging AI-versus-AI reality, speed becomes a prerequisite for effective defense. But Agarwal is clear that speed alone is not resilience. The real advantage comes from designing systems where automation accelerates response while human judgment retains control, backed by clear plans for when technology falls short. In her view, the future of security is not about trusting machines blindly, but about building safeguards around them.

"You need automation because you don’t have weeks or months to respond, you have minutes. But no model is 100% correct, so you always need a contingency plan for when something goes wrong," she concluded.

The views and opinions expressed are those of Anmol Agarwal and do not represent the official policy or position of any organization.
