Key Points

Enterprises face stalled AI adoption because they lack the security, data hygiene, and oversight needed to support AI-native development.

David B. Cross, CISO of Atlassian, said AI must follow the same Security Development Lifecycle as any other system, with teams upskilled to use it safely and effectively.

Cross outlined a path forward that pairs continuous, AI-driven security with a human in the loop to keep critical decisions accountable and reliable.

Enterprises are moving past the novelty of simply using AI and into the far more challenging work of becoming truly AI-native. That shift, where the technology is no longer a bolt-on layer for productivity but a foundational element of how code is created, reviewed, and secured, raises new questions. How can enterprises effectively build security, compliance, and human oversight into workflows that now run on intelligent systems?

David Cross, CISO of Atlassian, has spent more than twenty years leading security at scale across companies like Google Cloud and Microsoft. His background spans cloud security, identity, and enterprise risk, a mix that gives him a sharp, practical view of how AI is reshaping software development. Cross sees the industry at a turning point, where humans and machines are beginning to trade roles in how code is created and secured.

"Humans used to write all the code while AI validated it. Now AI writes the code, fixes the vulnerabilities, and keeps it compliant, and humans step in as the final check," said Cross. The result is a transformation of security from a periodic, manual chore into a continuous, automated motion. The old model of a once-a-year pen test is giving way to a system that finds and fixes issues in real time, while also evolving to counter modern threats like prompt injection. That continuous model is what finally makes the long-held dream of "shifting left" a practical reality, tackling persistent issues like technical debt and brain drain by giving every developer an expert assistant.

Legacy lifesaver: "Not every developer can specialize in security, privacy, or compliance, but AI can enforce those standards automatically," Cross explained. "It can even repair legacy code long after the original developer has moved on, because it doesn't need prior familiarity to understand what's broken."

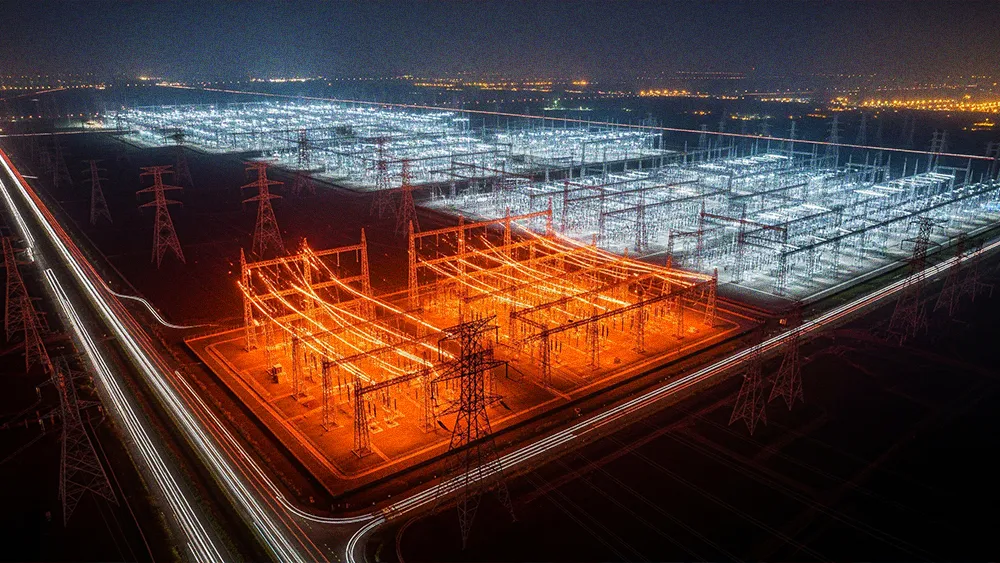

Hype check: But before companies can realize this vision, they have to confront a few inconvenient truths. A paradox has emerged where organizations are pouring massive investments into AI while struggling to see, let alone quantify, the returns. According to Cross, the problem often begins because leaders fail to start with a frank assessment of the business case. "You need to think about the value proposition and what you want to achieve," he said. "You have to realize that some of it will be experiments, and sometimes, using AI can actually be more costly than doing it manually."

Once the strategy is set, the biggest hurdles are often found in an organization's pre-existing data infrastructure and skills gaps. For many, these foundational gaps are where AI initiatives stall. But Cross suggests this is precisely where a thoughtful strategy can turn the problem on its head, using AI to solve the very issues that block its own adoption.

Data disarray: "A big element where organizations get blocked is that they don't have their data security, data labeling, and classification in place. When you interject AI, you might find that people now have access to things they never should have had access to before. The reality is, they always had access; they just didn't know about it," Cross said. To avoid this, Atlassian built an underlying data graph called the Teamwork Graph, which understands and respects all of an enterprise's permission structures, allowing customers to use AI with confidence.
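To make the idea of permission-aware AI concrete, here is a minimal sketch of filtering content by a user's existing access rights before anything reaches an AI assistant. It is purely illustrative and is not how the Teamwork Graph is implemented; the `Document` class, the group names, and the `build_context` helper are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    """Hypothetical record: a piece of content plus the groups allowed to read it."""
    doc_id: str
    text: str
    allowed_groups: frozenset


def visible_documents(docs, user_groups):
    """Keep only documents that share at least one group with the user."""
    groups = set(user_groups)
    return [d for d in docs if d.allowed_groups & groups]


def build_context(docs, user_groups, query):
    """Apply the permission filter first, then a naive keyword match."""
    permitted = visible_documents(docs, user_groups)
    return [d.doc_id for d in permitted if query.lower() in d.text.lower()]


# Illustrative data only.
DOCS = [
    Document("hr-001", "Salary bands for 2024", frozenset({"hr"})),
    Document("eng-042", "Salary of on-call rotation explained", frozenset({"eng", "hr"})),
    Document("pub-007", "Office opening hours", frozenset({"everyone"})),
]

if __name__ == "__main__":
    # An engineer asking about salary sees only what engineers could already read.
    print(build_context(DOCS, {"eng"}, "salary"))
```

The design point is the ordering: access is checked before retrieval, so the assistant never sees content the person asking could not already open.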

Literacy wins: Organizations that invest in baseline literacy are better positioned to accelerate past those that don't. For Cross, the solution is to stop treating AI like a mystical new force and start treating it like any other technology. "AI doesn't need a special set of rules. We've always had a Security Development Lifecycle, and the same thing applies to AI. It's no different," he noted. Cross warned that problems appear when teams build agents on the side without following that process. "A person in marketing might do their own vibe coding and create an agent without knowing the SDLC, but once they're plugged into the process, the work becomes straightforward and the risks drop quickly."

Achieving this AI-native state is reshaping how success is measured. Atlassian, for example, has moved its focus from chasing ambiguous ROI to prioritizing company-wide adoption as its key metric. The company's acquisition of DX reinforces the strategy, offering enterprises a way to better quantify the productivity gains from their engineering investments.

Two hats for the CISO: Pushing for mass adoption is forcing the CISO's role to evolve into a dual mandate. "First, we provide security for AI, where for every team adopting it we have the security, policies, and guardrails in place," Cross explained. "The other is using AI for security. As soon as information on a new threat is available, our AI is automatically building the detections for us."

A time-tested doctrine: As automation expands, security teams face not just more alerts, but alerts that land without context. To avoid this, Cross falls back on a core principle from his experience as a military veteran. "There's a principle where you always have a human in the loop for critical decisions as a QA check. Even as we do things automatically, we always have that human QA check. It's the same principle we use in military and commercial aviation, and it's very important in everything that we do."
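The human-in-the-loop principle Cross describes can be sketched as a simple approval gate: routine actions run automatically, while anything marked critical waits for a person's decision. This is an assumption-laden illustration, not Atlassian's process; the `Action` class, the `critical` flag, and the `approve` callback are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    """Hypothetical automated action proposed by an AI system."""
    name: str
    critical: bool


def run_action(action: Action, approve: Callable[[Action], bool]) -> str:
    """Run non-critical actions directly; route critical ones through a human."""
    if not action.critical:
        return "executed automatically"
    if approve(action):
        return "executed after human approval"
    return "blocked by human reviewer"


if __name__ == "__main__":
    # Stand-in reviewer: a real system would prompt an on-call analyst instead.
    def cautious_reviewer(action: Action) -> bool:
        return action.name != "disable production firewall"

    print(run_action(Action("add detection rule", critical=False), cautious_reviewer))
    print(run_action(Action("disable production firewall", critical=True), cautious_reviewer))
```

Passing the reviewer in as a callback keeps the automation and the human check separate, so the approval step can be swapped without touching the automated path.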

Ultimately, the path forward suggests that technology, security, and culture be treated as a single, cohesive strategy. "Just as AI should be incorporated natively, so should security. And doing them together is really how we will all be successful in the next few years," Cross concluded.
