Key Points

As enterprise AI moves into production, unpredictable behavior like drift and inconsistent outputs makes static, after-the-fact compliance ineffective and raises operational risk.

Siva Paramasamy, Global Head of Developer Experience Platform Engineering at Wells Fargo, explained that governance now succeeds only when controls are embedded across the model and agent lifecycle, directly within delivery pipelines.

He outlined a practical path forward that combines strong data governance, platform-ready DevOps pipelines, customized training, and human-in-the-loop oversight to make AI scalable and audit-ready.

The views and opinions expressed are those of Siva Paramasamy and do not represent the official policy or position of any organization.

Enterprise AI has left the sandbox and entered production, bringing with it a level of unpredictability that traditional compliance models were never designed to handle. As models drift, outputs vary, and agents act across real business workflows, governance is being pulled out of policy decks and into execution. What now defines AI maturity is whether controls are built directly into model lifecycles, agent development, and delivery pipelines, turning governance into a daily operational function rather than a periodic check.

Siva Paramasamy is the Global Head of Developer Experience Platform Engineering at Wells Fargo, where he leads enterprise GenAI and platform engineering initiatives inside one of the world’s most regulated environments. A C-level technology executive with deep roots in engineering and enterprise architecture, he has built a career spanning large-scale platforms at Sprint, patented AI-driven systems, and multi-year transformations. That end-to-end experience informs his focus on governance that works in production, not just on paper.

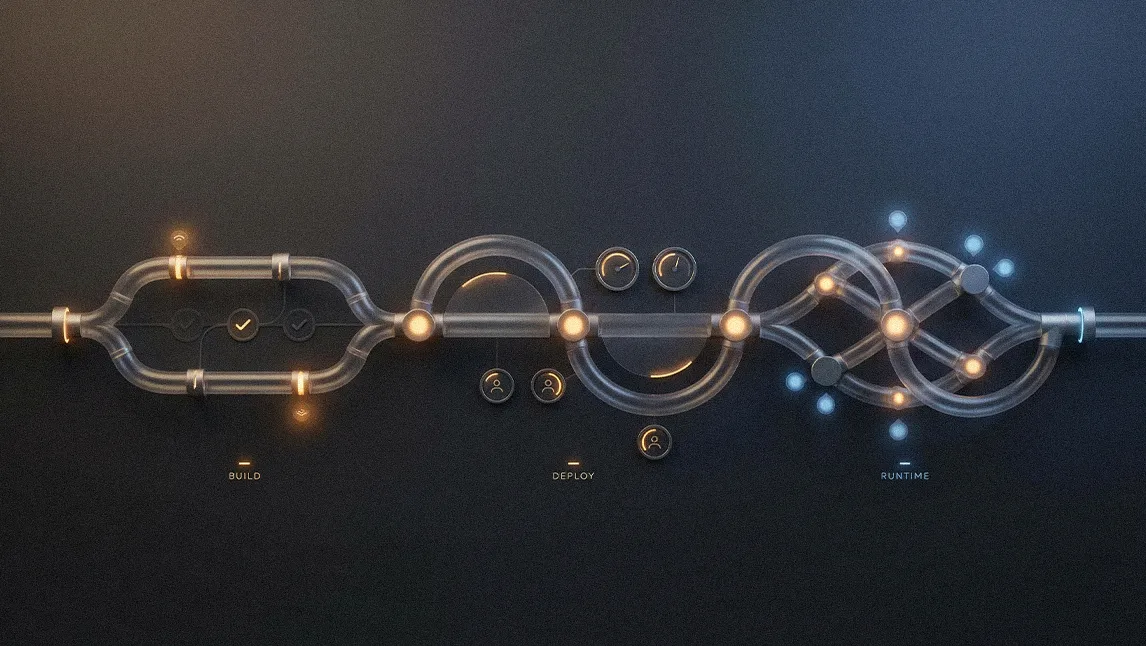

"There are two priorities. The first is the data you use to build context or tune the model, because data governance and data lineage are the foundation. The second is investing in the platform itself, your SDLC and DevOps toolchain, so you can govern agents, models, and prompts and monitor drift at build, deploy, and run time," said Paramasamy. Together, those priorities define what operational AI governance actually looks like once models and agents are embedded into everyday delivery workflows.

Low risk, high drift: Paramasamy framed the push toward hands-on governance as a response to AI’s fundamental unpredictability, comparing the moment to the mid-1990s scramble to govern the early internet. Because large language models do not behave consistently, after-the-fact compliance checks break down in practice. His answer is to extend the Risk and Control Self-Assessment (RCSA) framework into a full lifecycle discipline for models and agents, with controls embedded from build through runtime. "When a model is drifting or a prompt is not producing consistent results, that is a drift issue, and it requires controls like alerting and human-in-the-loop oversight, because even today’s low-risk use cases are already drifting in production," he explained.

Obstacles in execution: A framework is only the starting point, because execution at enterprise scale is where many AI programs stall. Integrating fast-moving GenAI workflows into legacy development lifecycles introduces operational friction, and a widespread gap in skills and knowledge compounds that challenge. "The difficulty of adapting existing software development lifecycles for GenAI, combined with the lack of necessary skill sets and general knowledge, is what slows adoption," Paramasamy said, adding that organizations cannot rely on hiring alone because "everyone is trying to do the same thing."

That challenge is why Paramasamy sees strategic investment in education as a key part of the solution. Driving broad adoption and ensuring safe usage require a customized approach that goes well beyond generic, company-wide training modules. Closing the growing AI governance gap means turning governance from a static policy into an operational reality for business and technology teams.

One size fits none: Paramasamy tied adoption directly to how well organizations invest in targeted education, warning that generic enablement programs fall flat once AI reaches different functions. "It is not a one-size-fits-all training," he said. "Every enterprise uses GenAI differently, whether for coding, optimizing business processes, or supporting operations teams, and training has to be customized for each group if adoption is going to stick." In his view, governance only works when people understand how AI fits into their specific workflows, not when it is introduced as a broad, abstract capability.

Built to scale: From there, the focus shifts to the two architectural pillars that make governance scalable: data and platforms. While the growing emphasis on data quality and lineage is reshaping corporate strategy, Paramasamy argues that the platform matters just as much. "You need to invest in the platform itself so your delivery and observability pipelines can govern models, prompts, and agents across the entire lifecycle," he noted, describing a unified operating layer that can orchestrate human and AI workflows while enforcing controls at build, deploy, and runtime.

AI governance is settling into its next phase as a continuous operational discipline, not a one-time compliance exercise. Regulation is still taking shape globally, with some governments moving early and others locked in debate, but that uncertainty is precisely the point. Organizations cannot afford to wait for perfect rules before acting. The organizations that hold up will be those building for resilience now, embedding explainability, traceability, and control directly into how AI systems are designed, deployed, and monitored. The shift also demands a different leadership posture, one less focused on managing people in isolation and more focused on orchestrating human and AI workflows together.

Paramasamy distilled that future-ready posture into two principles that keep governance grounded in practice. "Human-in-the-loop is important in any use case, whether for coding or business process automation," he said. "If you don’t have it, you will get burned by lawsuits down the line." He paired that warning with a longer-term view of accountability, adding, "You have to make sure your solutions account for ethics and bias and that fairness is considered when you build them, otherwise you may have something that works today but fails when it gets audited a year from now." Together, those guardrails frame governance not as a brake on innovation but as the mechanism that allows AI to scale safely, defensibly, and for the long haul.
