Key Points

Always-on AI features pushed through routine vendor updates expand healthcare risk after deployment, exposing PHI and sensitive data outside traditional procurement and governance controls.

Ed Gaudet, CEO and Founder of Censinet, explained that AI accelerates the growth of an already unmanageable third-party attack surface, one where static risk assessments and old certifications no longer reflect reality.

Gaudet defined the solution as a shift to secure-by-design, secure-by-default AI, with opt-in activation, granular controls, continuous lifecycle governance, updated contracts, and cross-functional oversight.

Many healthcare leaders think AI risk starts at procurement. Increasingly, it shows up after deployment. Major software vendors are quietly embedding always-on AI into routine updates, often with little notice or control. In healthcare, that shift creates new exposure paths for protected health information (PHI) and sensitive business data, as AI capabilities are activated inside tools long after they’ve been approved.

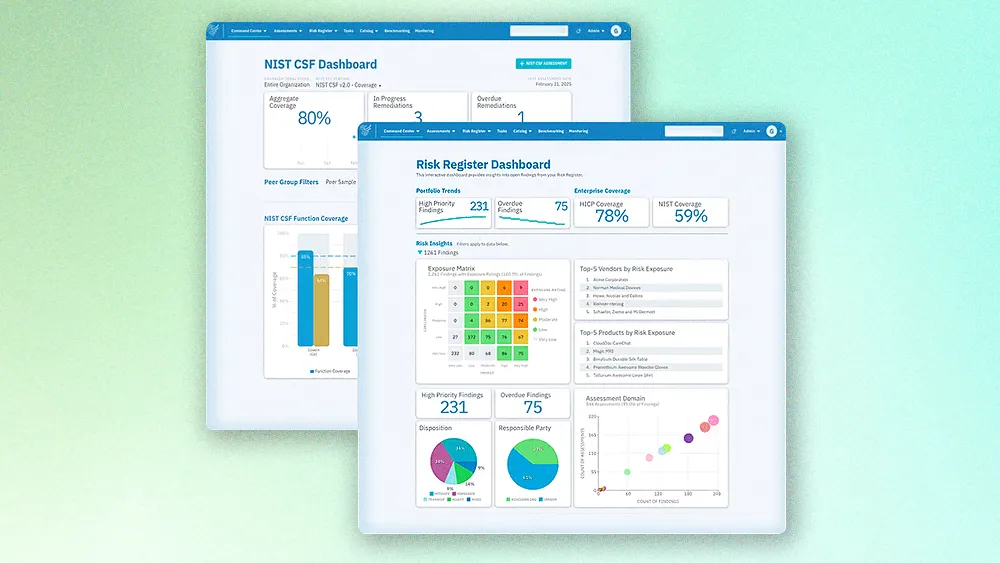

Ed Gaudet knows this emerging risk well. As CEO and Founder of Censinet, a collaborative cloud platform for healthcare third-party risk management, he draws on more than 30 years of building enterprise software, including multiple IPOs and acquisitions. Through his work and his Risk Never Sleeps podcast, Gaudet focuses on how risk quietly accumulates across modern healthcare environments.

According to Gaudet, the industry needs to reset its expectations by demanding a new architectural model. "Companies have to require that third parties build AI securely by design and secure it by default," said Gaudet. His proposed opt-in approach, where AI is built in but remains dormant until a customer chooses to activate it, is a powerful way of giving control back to the healthcare organization.

The risk is coming from inside the house: The AI threat doesn’t introduce a new problem so much as it speeds up one that was already getting out of control. "Before AI, healthcare organizations were already struggling with scale," Gaudet noted. "The average hospital is running on well over a thousand third-party products, and that alone makes static, point-in-time risk assessments ineffective." That scale creates a leadership blind spot. "Most governance models are still focused on what’s coming in the front door. But the real risk lives in the vendors already in the environment, quietly changing the attack surface through routine updates."

Flying blind: AI turns that quiet change into something more volatile. "When vendors can add new models or capabilities every few weeks, a SOC 2 report from last year stops being proof of anything," he continued. "The product you approved is no longer the product you’re running, and most organizations don’t have visibility into how much has changed."
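To make the point concrete, here is a minimal sketch of how a risk team might flag a product whose earlier assessment may no longer describe what is actually running. It assumes a hypothetical inventory record (`VendorProduct`) and a hypothetical one-year staleness policy (`MAX_ASSESSMENT_AGE`); neither reflects Censinet's platform or any specific standard.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical policy (assumption): an assessment older than this is treated as stale.
MAX_ASSESSMENT_AGE = timedelta(days=365)


@dataclass(frozen=True)
class VendorProduct:
    """Hypothetical inventory record for one third-party product."""
    name: str
    assessed_version: str         # version reviewed at procurement
    assessed_on: date             # date of that review
    running_version: str          # version currently deployed
    ai_features_added_since: int  # AI capabilities shipped since the review


def needs_reassessment(p: VendorProduct, today: date) -> list[str]:
    """Return reasons the prior assessment may no longer describe the running product."""
    reasons = []
    if p.running_version != p.assessed_version:
        reasons.append(f"version changed {p.assessed_version} -> {p.running_version}")
    if p.ai_features_added_since > 0:
        reasons.append(f"{p.ai_features_added_since} AI feature(s) added since assessment")
    if today - p.assessed_on > MAX_ASSESSMENT_AGE:
        reasons.append("assessment older than policy allows")
    return reasons


if __name__ == "__main__":
    product = VendorProduct("ehr-addon", "4.1", date(2023, 1, 10), "4.6", 2)
    for reason in needs_reassessment(product, date(2024, 6, 1)):
        print(f"{product.name}: {reason}")
```

The design choice here is to return reasons rather than a yes/no flag, so a reviewer can see why a product was pulled back into review.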

Lawyering up for AI: Just as importantly, Gaudet stressed that contracts with third parties should be updated to hold vendors accountable for responsible AI. "You must review your contractual agreements with third parties. If they've added AI, do your contracts need to be updated accordingly? You have to examine your limited liability and your overall coverage as it relates to cyber insurance."

To move beyond that reactive posture, Gaudet laid out a blueprint for CIOs that starts with mastering technical frameworks and setting clear architectural demands. He advised leaders to ground their strategy in established standards for assessing and managing vulnerabilities, a discipline that matters especially in healthcare, where the presence of PHI raises the stakes considerably. He called architectural containment a foundational principle and advocated for granular controls, which let organizations activate specific AI features tied directly to approved use cases rather than accept forced, all-or-nothing implementations.

Building on the basics: "Start by understanding the AI threat landscape," Gaudet advised. "From there, it's important to select the right assessment framework to assess the product, whether it's OWASP, the NIST AI Risk Management Framework, or a combination of the two."

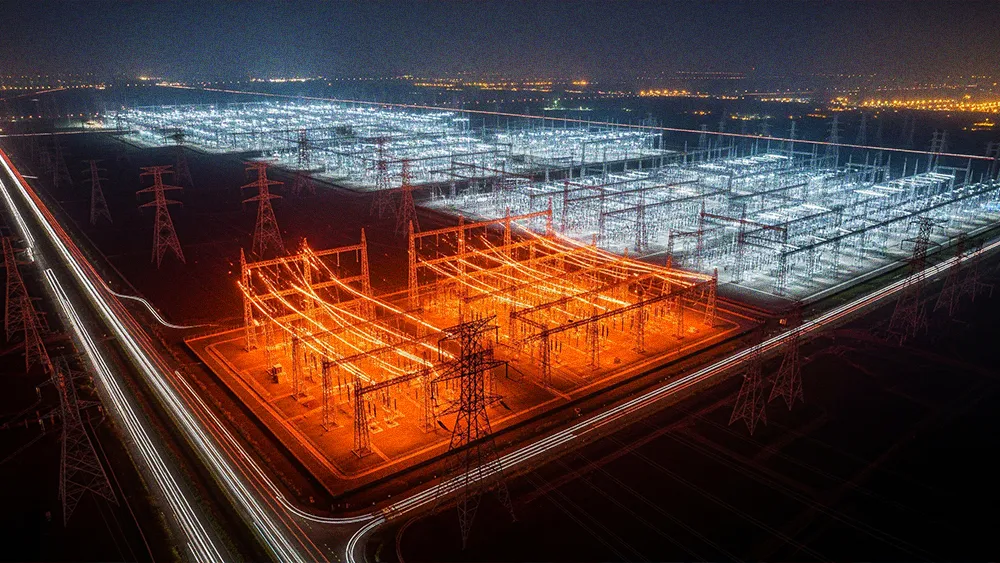

Location, location, location: "You have to know exactly what data flows through a tool, where it goes, and how it’s used, especially when that data includes PHI governed by HIPAA," he continued. "That’s why we built a self-contained environment. You can’t afford to have sensitive data sent to an unknown location, particularly offshore."
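As an illustration only, a governance team could keep an inventory of each tool's data flows and flag any flow that carries PHI to a destination outside an approved set of hosting regions. The sketch below assumes a hypothetical `DataFlow` record and a hypothetical `APPROVED_REGIONS` allowlist; it is not Censinet's self-contained environment or any vendor's API.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

# Hypothetical allowlist of hosting regions approved for PHI (assumption).
APPROVED_REGIONS = {"us-east", "us-west"}


@dataclass(frozen=True)
class DataFlow:
    """One documented data flow for a third-party tool (hypothetical schema)."""
    tool: str
    destination: str         # where the data is sent, e.g. a vendor AI endpoint
    region: Optional[str]    # hosting region, or None if the vendor hasn't disclosed it
    contains_phi: bool


def flag_phi_flows(flows: list[DataFlow]) -> list[DataFlow]:
    """Return PHI-carrying flows whose region is unknown or not on the allowlist."""
    return [
        f for f in flows
        if f.contains_phi and (f.region is None or f.region not in APPROVED_REGIONS)
    ]


if __name__ == "__main__":
    inventory = [
        DataFlow("scheduling-app", "vendor-api", "us-east", contains_phi=True),
        DataFlow("scheduling-app", "ai-summarizer", None, contains_phi=True),
        DataFlow("intranet-search", "ai-indexer", "eu-central", contains_phi=False),
    ]
    for flow in flag_phi_flows(inventory):
        print(f"Review: {flow.tool} -> {flow.destination} (region: {flow.region or 'unknown'})")
```

Treating an undisclosed region as a violation, rather than as acceptable by default, mirrors the concern about PHI going to an unknown location.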

Flipping the switch: That control has to extend down to the feature level. "AI can’t be an all-or-nothing switch. Organizations need the ability to turn specific capabilities on or off based on approved use cases, whether that’s one feature or twenty. That use-case-driven control is what makes governance workable."
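The opt-in, feature-level model Gaudet describes can be sketched as a per-tool policy in which every AI feature starts off and can be turned on only once it is tied to an approved use case. This is a minimal illustration under assumed names (`AIFeaturePolicy`, `approve`, `enable`); it is not a real vendor or Censinet API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AIFeaturePolicy:
    """Hypothetical per-tool AI switches: every feature is off until explicitly enabled."""
    approved_use_cases: dict[str, str] = field(default_factory=dict)  # feature -> use case
    enabled: set[str] = field(default_factory=set)

    def approve(self, feature: str, use_case: str) -> None:
        """Record that governance approved a feature for a specific use case."""
        self.approved_use_cases[feature] = use_case

    def enable(self, feature: str) -> None:
        """Turn a feature on only if it is tied to an approved use case."""
        if feature not in self.approved_use_cases:
            raise PermissionError(f"{feature!r} has no approved use case; it stays off")
        self.enabled.add(feature)

    def disable(self, feature: str) -> None:
        """Turn a feature off; disabling an unknown feature is a no-op."""
        self.enabled.discard(feature)

    def is_enabled(self, feature: str) -> bool:
        # Any feature not explicitly enabled, including one a vendor update
        # just shipped, is treated as off (secure by default).
        return feature in self.enabled


if __name__ == "__main__":
    policy = AIFeaturePolicy()
    policy.approve("note_summarization", "discharge summaries")
    policy.enable("note_summarization")
    print(policy.is_enabled("note_summarization"))
    print(policy.is_enabled("chat_assistant"))
```

In this sketch, `note_summarization` reports as enabled while `chat_assistant`, never approved, stays off. Whether one feature or twenty is switched on, each activation passes through the same approval check.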

Effective AI governance, Gaudet said, can’t live inside a single function. "It has to extend beyond IT and security to include groups like patient advocacy, nursing, clinical teams, and data informaticists. When you add it up, there are easily 10 to 15 stakeholder groups that need to be part of the conversation."

The implication is that enterprise AI governance must evolve from a one-time checkpoint for new software into a continuous, lifecycle-based discipline that covers every application already in the environment, addressing both blind spots in GenAI strategy and growing AI technical debt. That reality, he concluded, points toward a new industry benchmark: secure-by-design and secure-by-default should become the new procurement bar.
