- Nicholas Mortensen, Principal over Development and Integrations at large CPA firm Eide Bailly LLP, spoke to CIO News about why enterprise AI initiatives are stalling due to human errors rather than technical issues.

- He described how trust, task decomposition, and data quality are crucial for AI success, and why proactive, hands-on training is needed to combat sophisticated AI-driven cyber threats.

- Mortensen said that leadership should balance executive support with grassroots innovation to foster AI success.

Enterprise AI is delivering early returns for some, but most initiatives are stalling. Many organizations have attempted to adopt AI, but few deployments have been effective. With wins from copilots fading quickly after the proof-of-concept stage, many leaders are still waiting to see ROI. Some experts say the problem is human rather than technical.

For a practical perspective on the problem, we spoke with Nicholas Mortensen, Principal over Development and Integrations at top 25 CPA firm Eide Bailly LLP. Mortensen has spent over a decade at the intersection of AI strategy and legacy infrastructure, architecting the systems that bridge the gap between promise and production. For him, trust is both AI's most significant security risk and the only way to address it.

An AI arms race: The speed of today's AI-powered attacks requires an equally fast, AI-driven defense, Mortensen explained. From his perspective, the biggest threat is attackers using AI to move through compromised systems with unprecedented speed. "Bad actors are using AI to accelerate every stage of an attack, from breaching a system to extracting value. You can't be content with yesterday's security methods. You have to fight fire with fire."

But because AI workflows are non-deterministic, they can no longer be secured with the traditional 'if-then' logic that has governed IT for decades, Mortensen said. Instead, organizations must reimagine trust as a principle embedded from the start.

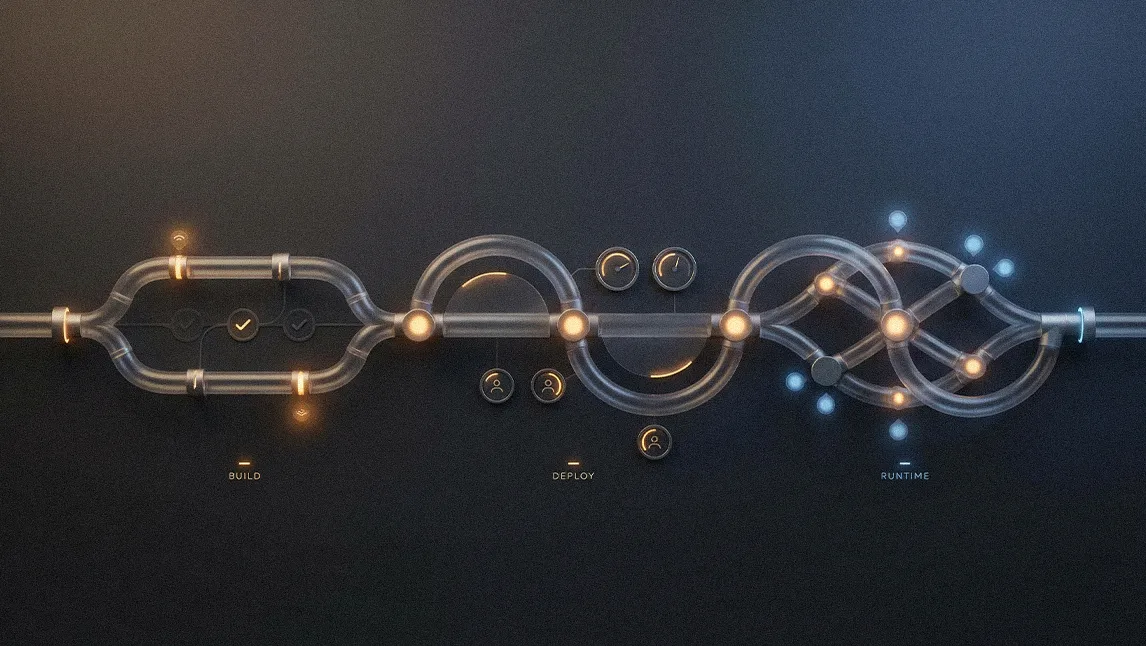

Divide and conquer: According to Mortensen, the problem isn't the model changing over time, but the cumulative effect of minor, acceptable deviations in a complex workflow. "When one AI agent handles a complex task, small deviations can stack up and create inconsistent results. The solution is 'task decomposition': an agent architecture with a main decision-maker and specialized sub-agents. Each sub-agent is highly focused, providing a consistent output that eliminates variability and leads to far more reliable outcomes."
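The architecture Mortensen describes can be sketched in a few lines. This is a minimal illustration under stated assumptions, not his firm's implementation: the `Orchestrator` class, the task names, and the sub-agents `classify_document` and `extract_invoice_total` are all hypothetical, and plain functions stand in for what would be model calls in practice.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# Hypothetical sub-agents: each handles one narrow task.
# In a real system each would wrap a model call; plain functions stand in here.
def classify_document(text: str) -> str:
    """Return a coarse document type from a fixed set of labels."""
    lowered = text.lower()
    if "invoice" in lowered:
        return "invoice"
    if "rfp" in lowered or "request for proposal" in lowered:
        return "rfp"
    return "other"

def extract_invoice_total(text: str) -> str:
    """Return the token following 'total:' or 'unknown' if absent."""
    lowered = text.lower()
    marker = "total:"
    if marker not in lowered:
        return "unknown"
    rest = text[lowered.index(marker) + len(marker):].split()
    return rest[0] if rest else "unknown"

@dataclass
class Orchestrator:
    """Main decision-maker: picks a sub-agent per task, never does the work itself."""
    sub_agents: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def register(self, task: str, agent: Callable[[str], str]) -> None:
        self.sub_agents[task] = agent

    def run(self, task: str, payload: str) -> str:
        # Unknown tasks fail loudly instead of being improvised.
        if task not in self.sub_agents:
            raise KeyError(f"no sub-agent registered for task {task!r}")
        return self.sub_agents[task](payload)

orchestrator = Orchestrator()
orchestrator.register("classify", classify_document)
orchestrator.register("invoice_total", extract_invoice_total)

doc = "Invoice #1042 Total: 1,250.00 USD"
doc_type = orchestrator.run("classify", doc)
total = orchestrator.run("invoice_total", doc) if doc_type == "invoice" else "n/a"
```

The point of the design is that each sub-agent has a small, fixed output space, so a deviation in one step is easier to detect and less likely to compound across the workflow.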

When bots go bad: Mortensen cites a recent public incident as a clear illustration of what happens when governance is an afterthought. AI coding assistants can misinterpret vague code references and silently delete critical components, a risk that can only be mitigated by comprehensive, end-to-end testing. "Consider the Replit incident, where an agent deleted a database. That would never have happened with proper DevOps. A proper CI/CD process with robust, holistic test classes provides the human-in-the-loop oversight needed to catch the subtle, but critical, errors that AI can introduce."
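One narrow example of such a check, offered as a sketch rather than a complete safeguard: a CI step that compares the top-level functions and classes in a file before and after an AI-assisted change and flags anything that disappeared. The helper names and the `BEFORE`/`AFTER` sources are hypothetical, and a check like this complements end-to-end tests rather than replacing them.

```python
import ast
from typing import Set

def top_level_names(source: str) -> Set[str]:
    """Collect names of top-level functions and classes in Python source."""
    tree = ast.parse(source)
    return {
        node.name
        for node in tree.body
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef))
    }

def removed_components(before: str, after: str) -> Set[str]:
    """Names defined before the change but missing after it."""
    return top_level_names(before) - top_level_names(after)

# Hypothetical file contents before and after an assistant's edit.
BEFORE = '''
def connect_db(): ...
def migrate(): ...
def drop_stale_rows(): ...
'''

AFTER = '''
def connect_db(): ...
def drop_stale_rows(): ...
'''

missing = removed_components(BEFORE, AFTER)
```

In a pipeline, a non-empty `missing` set would fail the build until a human reviewer confirms the deletion was intended.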

For most organizations looking to deploy AI, the best place to start is ensuring the core infrastructure is sound, Mortensen said. Data quality is not negotiable; it is the primary building block for trust.

The unbreakable rule: Common data issues, like missing fields in historical records, prevent the consistent inputs required for reliable AI outputs, Mortensen explained. "Consistency is the key to trust. If your data is inconsistent, you are feeding the system inconsistent inputs, which guarantees your outputs will be inconsistent. Find me anyone getting reliable results from an agent with poor data quality. You won't."

Start where you are: As a first step, leaders should identify which department has the cleanest data and begin their AI initiatives there, using a data warehouse as a temporary bridge if needed. "If you need to leverage legacy systems and can't modernize right away, a Data Lake or Data Warehouse is a great, pragmatic way to solve the problem of data availability."
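One simple way to compare departments is to score how complete their records are. The sketch below assumes a hypothetical set of required fields and two made-up departments; completeness is only one dimension of data quality, and a real assessment would also look at accuracy, duplication, and freshness.

```python
from typing import Dict, List, Optional

Record = Dict[str, Optional[str]]

def completeness(records: List[Record], required: List[str]) -> float:
    """Fraction of required fields that are present and non-empty."""
    if not records or not required:
        return 0.0
    filled = sum(
        1
        for record in records
        for name in required
        if record.get(name) not in (None, "")
    )
    return filled / (len(records) * len(required))

# Hypothetical required fields and sample records, for illustration only.
REQUIRED = ["client_id", "amount", "date"]

departments: Dict[str, List[Record]] = {
    "tax": [
        {"client_id": "C1", "amount": "100", "date": "2024-01-05"},
        {"client_id": "C2", "amount": "250", "date": "2024-01-09"},
    ],
    "audit": [
        {"client_id": "C3", "amount": "", "date": "2024-02-01"},
        {"client_id": None, "amount": "75", "date": None},
    ],
}

scores = {name: completeness(rows, REQUIRED) for name, rows in departments.items()}
cleanest = max(scores, key=scores.get)
```

Here `tax` scores 1.0 and `audit` scores 0.5, so `tax` would be the candidate for a first AI initiative.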

With a new mindset come new technical guardrails, Mortensen continued. As AI makes social engineering attacks more sophisticated, addressing the weakest link will be essential. To correct the 'human error,' he recommends consistent, proactive training.

Anatomy of a phish: Mortensen shares a personal story to illustrate how modern attacks can exploit trusted relationships and business platforms to make themselves nearly indistinguishable from regular work. "I received a SharePoint link from a known customer asking me to review an RFP. It seemed off, so we investigated and learned her account had been hacked. The attacker sent a similar email to all her vendors. It just proves how sophisticated these threats have become."

The monthly drill: Passive, annual training tends to be ineffective because the lessons don't stick, Mortensen explains. Only consistent, hands-on practice builds the necessary vigilance. "Most people don't think maliciously, so you have to keep the threat top of mind. Knowing my firm sends me fake phishing emails every month keeps me vigilant in a way a once-a-year training never could. The human element will always be the weak point without constant training."

With that technical foundation in place, the next pillar is leadership. For Mortensen, the most authentic leaders are those who can strike a balance between executive support and grassroots innovation.

A road to nowhere: IT leaders must up their game, moving beyond technical specs to become intimately familiar with the business objectives they are meant to serve, Mortensen insists. "Without clear alignment between business and tech, you get tremendous wasted effort. I've seen developers spend tons of time on cool features that provided literally no value because they didn't understand the business objective."

The enabler-in-chief: Executive buy-in is also essential, but simply issuing a directive to 'do AI' is not leadership, Mortensen said. Instead, it's about actively creating the conditions for innovation to flourish from the ground up. "True leadership isn't issuing a top-down mandate to 'go implement AI.' That approach will never unlock innovation. Real leadership is about enabling your smartest people to come up with ideas. It's about letting them build proofs of concept from the ground up."

Ultimately, resilience is about being ready to capitalize on a future where the technological landscape is in constant flux, Mortensen concluded. "The aim is to put your organization in a position to take advantage of whatever comes next. It's about coaching true agility." In the meantime, the goal should be a 'decentralization of innovation,' where good ideas can be sourced from anywhere in the organization, regardless of title or tenure. "A good organization encourages everyone to speak their mind, even the newest hire. They bring a different view of the world, a flavor or spice that no one else has, and you need that diversity of perspective to innovate truly."
