- The race to deploy AI has created significant business and customer risks, as leaders are often forced to gamble on black-box solutions they don't fully understand.

- According to Aaron Weller, Leader of HP's Privacy Innovation & Assurance CoE, companies need a new standard of accountability that shifts governance from a reactive chore to a strategic enabler of innovation.

- Weller explained that effective governance requires both technical transparency and an understanding of an AI system's potential applications, and that this combination produces resilient systems able to adapt to future challenges.

A new standard of accountability is emerging for how enterprises deploy AI systems. A gold rush mentality has taken hold at the expense of pragmatism, creating a landscape of duplicated effort, fragmented strategies, and elevated risk. While some vendors promise transformation, they often deliver opaque, black-box point solutions, leaving leaders to gamble on tools they don't fully understand. The future of AI adoption instead hinges on a radical reframing of transparency: treating every AI tool not as magic, but as a financial asset that requires its own "balance sheet."

CIO News spoke with Aaron Weller, Leader of the Privacy Innovation & Assurance CoE at HP, to understand how enterprise leaders can navigate this chaotic new era. A veteran of the tech industry’s biggest shifts, Weller has spent over 20 years at the intersection of business strategy and risk management, having led privacy regionally for PwC, supported eBay through the GDPR transition, and co-founded two security and privacy startups. His career has been defined by the challenge of managing personal data in ethical ways to drive business outcomes, giving him a unique perspective on the governance crisis facing AI today.

- The business imperative: "The analogy I like to draw is the public reporting a company has to do for its financials. You're supposed to get enough information to make an investment decision, and that's what we're talking about when buying an AI product. If you're not giving us that kind of transparency in what I call 'the balance sheet of the tool,' you won't really know what you're getting. You're effectively just throwing money at something and hoping for a return."

More than a clever metaphor, Weller’s "balance sheet" idea is a practical framework for due diligence in an age of autonomous systems. He argued that true transparency has two critical dimensions. The first is technical, requiring an understanding of how a model works. But the second, and more important, is applicational: understanding the full scope of what it could do.

- The two dimensions of transparency: "For me, that transparency is partly about the openness of the product itself, but I think it's also partially around how you get people to think about how this could be used," Weller explained. He pointed to recent examples, like a voice agent negotiating a phone bill, as crucial for helping organizations anticipate the real-world implications and potential misuse of the technology.

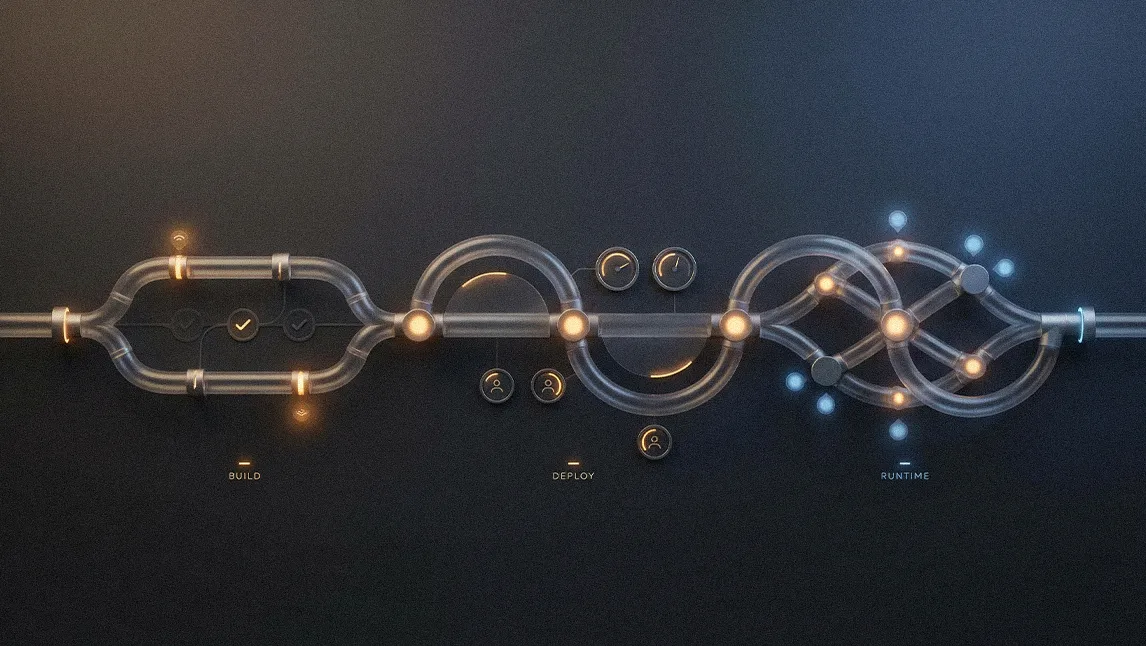

- Architectural resilience: "Most people are just trying to get a chatbot out, honestly. But we need to be able to drag-and-drop or find-and-replace a particular AI model without breaking the whole system. That redundancy in models creates resilience, just like we build resilience into our data center strategies."

This philosophy turns governance from a reactive, compliance-driven exercise into a proactive, strategic function. Weller pointed to a common organizational pitfall born from the frantic pace of innovation. "You have these teams who go off and do stuff, and then they'll bring us a use case and we'll say, 'This other team did exactly the same thing two weeks ago.' And they'll reply, 'Never heard of them.' So the challenge is, how do we find that balance between letting people innovate, but also making sure they're not wasting a bunch of time duplicating work?"

- Governance as an enabler: Effective governance, he argued, isn't about slowing innovation; it's about building a resilient foundation that accelerates it. This new perimeter for governance begins at the point of entry: Weller’s team works directly with procurement to vet the explosion of tools that are suddenly adding AI features. But the ultimate backstop isn't a rulebook; it's public accountability. HP practices what Weller preaches, having published its AI governance principles for the world to see and worked to map them to controls and review processes, so that it can offer both the promise and the proof.

- A higher standard: "One of our principles is that any AI we produce is going to be fair to customers. It's not going to recommend one product over another because one is more profitable for us. It should recommend the best solution for the customer based on their input. While this may not be explicitly required in all jurisdictions, considering the system from a customer perspective drives a different level of governance."

This internal focus on strategic governance was a direct response to an increasingly fragmented global landscape. As AI becomes critical infrastructure, it is no longer just a compliance problem; it's a sovereignty issue. Weller pointed to a world where national interests are creating a geopolitical minefield for any company operating globally.

- The sovereignty issue: "Recent Chinese guidance imposes significant requirements on training datasets to train AIs. This guidance could be read that, effectively, for a training dataset to be approved for use in China it will need to be Chinese-origin data."

Weller's concerns about the high stakes are echoed by industry analysis. Research from firms like Gartner, for instance, has predicted that a significant percentage of enterprise AI initiatives could fail, making architectural flexibility a key survival trait. Weller argued the only viable path forward was to build for resilience, creating systems that can adapt to shifting geopolitical realities.

This need for flexibility will become even more critical as the market heads toward its inevitable endgame. While the current environment feels like a wide-open frontier flush with venture capital, Weller was clear that this was a temporary phase. Drawing a parallel to the early days of cloud computing, he predicted a coming wave of consolidation that would separate the winners from the losers.

- Coming consolidation: "There's a lot of venture money being thrown at AI right now. There will be market consolidation. At some point you've got to get an ROI, right? Or shareholders or the VCs are going to come after you. You now have AWS, GCP, and Azure, the big three platforms. But if you go back 15 years, there were 100. So I do think there will be some consolidation at some point."

That inevitable consolidation makes it all the more critical to design an AI strategy with the same foresight and flexibility once applied to cloud. As Weller put it: “Think about your AI strategy as you do your cloud strategy. Consider multi-vendor delivery with clear understanding of how to port data between them as capabilities and pricing models change. AI Governance should be about much more than compliance – it should allow your organization to navigate the risks, and the benefits, effectively.”
