- Many legacy organizations find that adopting AI exposes existing problems like disconnected systems and unclear data definitions.

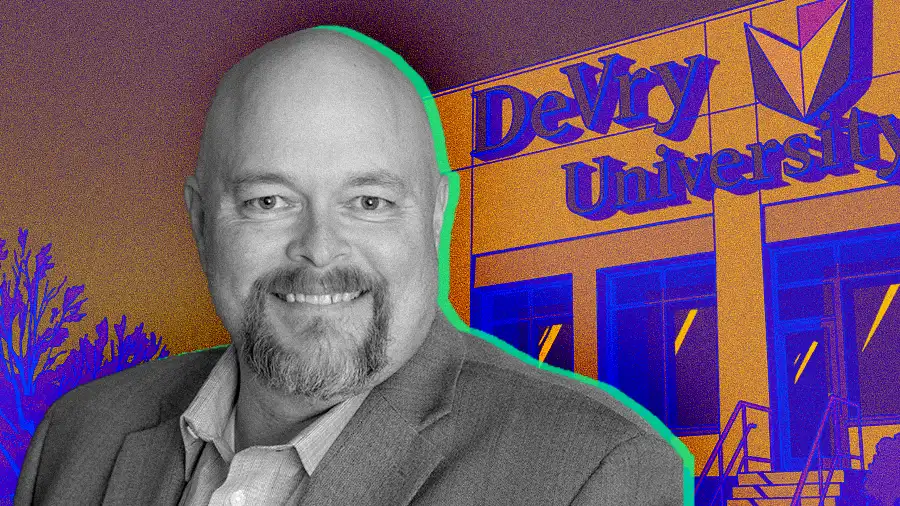

- Chris Campbell, Chief Information Officer at DeVry University, explained that success requires fixing internal misalignment before deploying AI.

- He established an executive-led governance model that aligned every initiative with business goals and proved that AI works best alongside people, not instead of them.

For many legacy organizations, integrating artificial intelligence is not simply a matter of new technology but of organizational readiness. Rather than solving existing problems, AI often exposes them, bringing long-standing issues like disconnected systems, competing priorities, and unclear data definitions to the surface. The real test lies not in deploying AI itself but in creating the alignment needed to make it work.

Chris Campbell, Chief Information Officer at DeVry University, believes the path to effective AI adoption begins with internal clarity and discipline. With more than two decades of experience leading digital transformation, Campbell has served as an advisory board member for strategic groups such as Evanta, a Gartner Company, and as Senior Director at Adtalem Global Education. He brings a clear perspective on what it takes to turn technological potential into measurable impact, and in his view, an organization must first get its own house in order before it can get AI right.

"If your own core dataset is confused, how can we hope for something like AI to interpret it appropriately? The truth is that every team sees data through its own lens. The definition of a customer may mean one thing to marketing and something entirely different to customer success. Until an organization agrees on what its data actually means, AI will only amplify the confusion that already exists," Campbell said. Campbell's core argument is that inconsistent data definitions and competing interpretations across departments undermine the ability of intelligent systems to deliver reliable value.

To combat this, Campbell championed the creation of DeVry's AI Lab, a governance body designed to elevate AI to a C-suite-level strategic priority. Leaders proposing an initiative must formally present how it will work, its ROI, and its potential gaps. Because the executive team approves projects as a unit, every initiative is cross-functionally aligned and tied to a clear business outcome.

Steering in the right direction: "We use a steering committee comprised of most of our executive team to ensure every initiative is aligned with our current strategies. It's a model we've used for a long time, and it works particularly well for governing our AI Lab," Campbell explained.

The power to say no: For the governance model to have teeth, it can't just be a rubber stamp. According to Campbell, it also has to serve as a quality control mechanism. "We were working with a partner on a self-service chatbot for employees, and it became obvious their team didn't have the necessary skills, even if the technology was okay. That's the kind of engagement we will terminate."

Fluency over features: "We're not shopping for AI; we're looking for AI fluency. We need tools that can think in the language of our enterprise, not just predict within it. I refuse to adjust our university's mission or KPIs to align with a tool based on a vendor's assumption that their AI is automatically the right way to do things." It's a reminder that true innovation is not about adopting the newest technology but about choosing tools that understand and strengthen the organization's own purpose.

But Campbell's disciplined approach also challenges the conventional "legacy vs. disruptor" narrative in AI. His strategy reframes decades of institutional knowledge, turning a perceived liability into a competitive advantage. The idea finds echoes among other tech leaders who advocate for reinventing processes to be truly AI-native.

Home-field advantage: He argues that the real value of AI comes from how well it fits the organization it serves, not from the technology itself. "AI itself is not the superpower; the real superpower is the context and knowledge of my ecosystem, my business, and my customer. Many AI vendors don't think that way. They approach it with the perspective that the AI itself is the secret sauce that will make everything better."

The human in the loop: "We're very clear: our philosophy is humans plus AI, not AI instead of humans. It's about keeping people in the ecosystem and making them more effective by augmenting their work with AI tools."

But does this philosophy actually work? At DeVry, Campbell points to concrete results. By leveraging its deep contextual understanding of the student journey, the university has deployed AI to drive new business and improve the student experience. A key example is DeVryPro, a professional education platform that demonstrates the effectiveness of the university's governance-led approach.

Innovation on a dime: "Our goal was to launch the new product line as lean as possible. We used digital agents to manage the entire process, from initial exploration to course sign-up, which allowed us to do it with next to no operational resources," Campbell explained. The result is a living example of governance-led innovation: an AI initiative designed around real institutional needs, proving that strategic alignment can achieve both speed and substance.

Ultimately, Campbell's message is one of pragmatic iteration. In an environment of constant change, he argued the goal is to build a resilient, aligned organization capable of continuous adaptation, not to seek perfection on day one. "Nothing is static," he said. "No matter how you deploy it, you're going to have to adjust it." It isn't just a philosophy; it's a practiced discipline.

"Our oldest AI application is eight or nine years old and actually predated our current governance. We recently brought it back under the AI Lab's purview to make several adjustments because, upon deeper inspection, it wasn't delivering the results we expected." The path forward, then, is less about finding a new tool and more about mastering the discipline to wield it effectively. "You have to figure out this new tool, just like you had to figure out how to use an iPhone," Campbell concluded. "AI is no different. It just takes time, curiosity, and a willingness to adapt."
