- Agentic AI is in a "stumbling" phase, with most enterprises finding it fragile and error-prone.

- Manav Pandey, an AI Engineer at American Express, told CIO News why orchestrating small, specialized AI systems tends to be a more effective approach.

- He recommended adopting best-in-class tools from the market rather than building them in-house, and training small, specialized models for narrow tasks.

- For high-stakes AI tasks, Pandey suggested benchmarking against classical algorithms and machine learning models with 99.9% accuracy or better, rather than against human-level performance.

*The views expressed in this article belong to Manav Pandey and do not necessarily reflect the official policy of any organization.*

The promise of agentic artificial intelligence is clear: autonomous systems that can automate entire workflows. For now, though, the reality is often fragile, error-prone, and unstable. A blog post from AI 2027 aptly calls this the "stumbling" phase, a term that captures the tension facing many enterprises: how to square the technology's disappointing results today with its immense potential tomorrow.

To make sense of this disconnect, we spoke with Manav Pandey, a Senior Machine Learning Engineer at American Express who specializes in foundation model training and cognitive architectures. With hands-on experience building the very systems enterprises are grappling with, he frames the current moment around a critical distinction: the path forward, according to Pandey, favors clever system design over the pursuit of ever-larger models.

The algorithmic mimic: The most effective AI agents are those that mimic the stability and precision of smaller, specialized models, said Pandey. "The process is simple. Take one highly specific task, build a perfect dataset for it, and then train a small language model to be a world-class expert on just that one thing. This is how we build stable and trustworthy AI."

Right tool, right job: Pandey's philosophy rests on a pragmatic engineering principle: use the simplest, most accurate tool for the job. "We need to apply a 'right tool for the right job' philosophy to agentic AI. For example, why use a massive, expensive large language model to make a simple decision between two tools when a classical classification model can do it with near-perfect accuracy for a fraction of the cost? Using the simplest, most effective tool is the foundation of good engineering."
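To make the routing idea concrete, here is a minimal sketch, not Pandey's implementation, of a classical classifier choosing between two tools. It assumes two hypothetical tools, `search_docs` and `run_sql`, and a handful of made-up labeled requests. The classifier is multinomial naive Bayes written with the Python standard library; a library model such as scikit-learn's logistic regression could fill the same role.

```python
import math
import re
from collections import Counter, defaultdict

# Hypothetical labeled requests and tool names; a real router would be
# trained on logged, labeled traffic.
TRAINING_EXAMPLES = [
    ("how many orders shipped last month", "run_sql"),
    ("total revenue by region for 2024", "run_sql"),
    ("count active customers in the table", "run_sql"),
    ("what is our refund policy", "search_docs"),
    ("find the onboarding guide for new hires", "search_docs"),
    ("where is the security policy document", "search_docs"),
]


def tokenize(text: str) -> list[str]:
    # Lowercase and keep alphanumeric runs so punctuation does not create new words.
    return re.findall(r"[a-z0-9]+", text.lower())


class NaiveBayesRouter:
    """Multinomial naive Bayes over bag-of-words, used to pick one tool per request."""

    def __init__(self, alpha: float = 1.0) -> None:
        self.alpha = alpha                       # Laplace smoothing for unseen words
        self.word_counts = defaultdict(Counter)  # tool -> word -> count
        self.doc_counts = Counter()              # tool -> number of training requests
        self.vocab: set[str] = set()

    def fit(self, examples):
        for text, tool in examples:
            tokens = tokenize(text)
            self.word_counts[tool].update(tokens)
            self.doc_counts[tool] += 1
            self.vocab.update(tokens)
        return self

    def predict(self, text: str) -> str:
        total_docs = sum(self.doc_counts.values())
        vocab_size = len(self.vocab)
        best_tool, best_score = "", -math.inf
        for tool, n_docs in self.doc_counts.items():
            # Log prior plus the sum of smoothed log likelihoods of each word.
            score = math.log(n_docs / total_docs)
            total_words = sum(self.word_counts[tool].values())
            for token in tokenize(text):
                count = self.word_counts[tool][token]
                score += math.log((count + self.alpha) / (total_words + self.alpha * vocab_size))
            if score > best_score:
                best_tool, best_score = tool, score
        return best_tool


router = NaiveBayesRouter().fit(TRAINING_EXAMPLES)
print(router.predict("how many customers signed up last month"))  # prints "run_sql"
```

The appeal is the one Pandey names: cost. Each prediction is a few dictionary lookups and additions per word, with no model-serving infrastructure, and its accuracy can be measured directly on a held-out set of labeled requests.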

Caught between hype and prudence, many enterprises now follow a cautious strategy, Pandey said, one in which an intense focus on immediate ROI often drives the "build vs. buy" decision.

Buy, don't build: Most companies will get more value from adopting specialized AI tools from the market than from trying to build them from scratch, according to Pandey. "The expertise required to create these systems is highly specialized and niche. A company like JPMorgan isn't going to build its own AI coding assistant from the ground up. It makes far more sense for them to use a proven tool from a company like Anthropic, OpenAI, or Google. That principle applies to most use cases."

Playing it safe: Such caution is a direct response to the high failure rate of enterprise AI pilots, said Pandey. "The safest and most effective way for a company to start with agentic AI is to focus on internal efficiency. These projects have the lowest risk and the clearest return on investment, which makes them the most logical place to begin. It's a simple trade-off between risk and value, and right now, the smart money is on making your own company run better."

With a growing ecosystem of accessible tools now available, the focus is shifting to people, Pandey explained. In his experience, the greatest untapped value lies in the skilled practitioners using AI, not in the tools themselves.

Mindset over matter: The ideal AI user is someone who knows how to use it as a force multiplier rather than a replacement for their own expertise. "The key employees driving AI adoption aren't necessarily the most 'hyper-technical.' They are the most 'hyper-adoptive.' These are the people who are willing to try new tools and figure out how to integrate them into their daily workflow. Often, it's the more junior employees who are most eager to use these tools because they see them as a way to augment their skills and speed up their work."

Automate the awful: The most effective strategy for driving adoption is a human-centric one, said Pandey. "To get people to actually use AI tools, you should focus on automating the parts of their job they hate. No one wants to automate the creative or strategic work they enjoy. The real value lies in eliminating the tedious, repetitive tasks they're inundated with, such as handling bureaucracy or organizing data. If you make their lives easier, you'll find much higher adoption."

So far, the prevailing answer to governance has been the "human in the loop." But that approach has a fundamental problem, Pandey said: it doesn't scale.

The human teacher: Instead, the path forward recasts the human’s purpose from a simple backstop to a valuable source of training data. "The most valuable role for a 'human in the loop' isn't just to be a safety net, but to be a teacher. Every time a human makes a decision or corrects the AI, they are creating valuable data. We can collect that data, build a specialized dataset from it, and then use that to train a much smaller, more efficient model to perform that one specific task perfectly, just like the human expert did."
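The "human as teacher" loop can be sketched as a review log that turns every human decision into a labeled example. This is a hypothetical illustration rather than a system Pandey described: the `ReviewLog` class, the JSON field names, and the dispute labels are assumptions, and the step of actually training a small model on the exported file is left out.

```python
import json
from dataclasses import dataclass


@dataclass
class Review:
    input_text: str
    model_output: str  # what the AI proposed
    human_output: str  # the expert's final decision, which becomes the label

    @property
    def corrected(self) -> bool:
        return self.model_output != self.human_output


class ReviewLog:
    """Hypothetical store of human-in-the-loop decisions."""

    def __init__(self) -> None:
        self.reviews: list[Review] = []

    def record(self, input_text: str, model_output: str, human_output: str) -> None:
        self.reviews.append(Review(input_text, model_output, human_output))

    def correction_rate(self) -> float:
        # Share of cases where the human overrode the AI.
        if not self.reviews:
            return 0.0
        return sum(r.corrected for r in self.reviews) / len(self.reviews)

    def to_training_jsonl(self) -> str:
        """One JSON object per line pairing each input with the human's label.

        Approvals are kept as well as corrections, since both confirm the right
        answer; exact duplicate (input, label) pairs are dropped.
        """
        seen = set()
        lines = []
        for r in self.reviews:
            key = (r.input_text, r.human_output)
            if key in seen:
                continue
            seen.add(key)
            lines.append(json.dumps({"input": r.input_text, "label": r.human_output}))
        return "\n".join(lines)


# Hypothetical dispute-triage decisions made by a human reviewer.
log = ReviewLog()
log.record("card charged twice for one order", "fraud", "billing_error")
log.record("unrecognized purchase overseas", "fraud", "fraud")
log.record("card charged twice for one order", "fraud", "billing_error")
dataset = log.to_training_jsonl()
```

Tracking the correction rate alongside the dataset gives a rough signal of when a task has enough consistent human decisions to hand off to a smaller model.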

This focus on measurable performance also leads to a crucial distinction: for high-risk, consumer-facing systems, even a human-level benchmark might be the wrong standard entirely.

The 99.9% rule: "For any high-stakes, customer-facing system, comparing the AI to a human is the wrong benchmark. A human makes mistakes. The ideal standard should be a traditional algorithm with 100% accuracy or a classical machine learning model with 99.9% accuracy. These systems are not only more accurate but also faster, more reliable, and much less expensive to run."

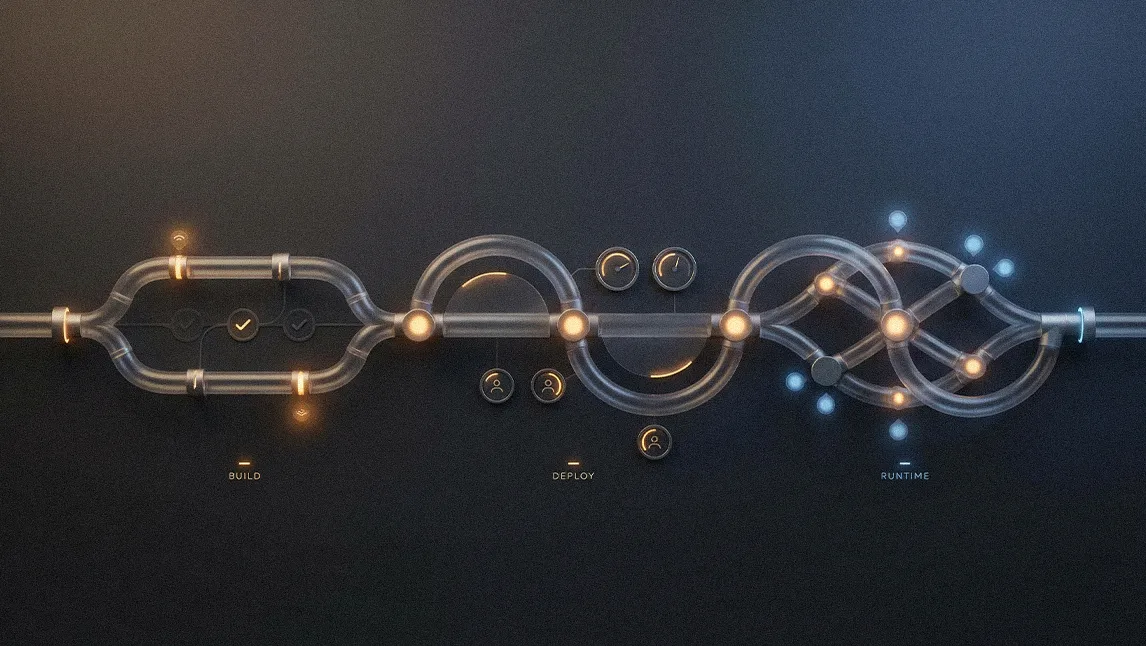

For Pandey, the "stumbling agent" problem is a solvable engineering challenge, not a dead end. The inevitable "AI sprawl" of disparate tools demands a more thoughtful system, one in which classical algorithms and specialized models work in concert. "The future of agentic AI is just clever orchestration," he concluded. "But as the big players start integrating this technology and making it stable, and as the models improve at tool-calling, it's going to dramatically reshape the tech environment and society in general."
