- The probabilistic nature of AI creates a fundamental conflict with the deterministic, rule-based world of business, introducing unmanaged risk.

- Zscaler's Chief AI Officer, Claudionor Coelho Jr., warned that human complacency with "almost-perfect" AI creates new dangers, while natural language itself becomes a new attack vector.

- He argued that the solution is to apply timeless cybersecurity principles, like the security perimeter, and build hybrid systems that know when to hand off tasks to more reliable, discrete tools.

The enterprise operates in a deterministic world of hard rules and predictable outcomes. AI, by design, operates probabilistically. The widening gap between these two realities has become one of the greatest sources of unmanaged risk for modern businesses, and it gives executive leadership a new imperative: rely on trusted vendors and well-defined security perimeters to recover as much predictability as possible. The challenge is not to accelerate AI adoption at all costs, but to understand AI's fundamental limits and build a governance framework that respects them.

We spoke with Claudionor Coelho Jr., the Chief AI Officer at Zscaler, whose career at the forefront of AI and security includes leadership roles at Google and Palo Alto Networks. Drawing on decades of experience, he argued that navigating the AI revolution requires a disciplined, first-principles approach. He recently outlined this view in a presentation at an MIT workshop titled "Do LLMs Dream of Discrete Algorithms?" It begins with a simple but profound piece of advice.

"We need to figure out when we reach the limit of an LLM as quickly as possible," Coelho said. "And then use different types of tools that can provide you with the correct information. Do not allow them to overthink the solutions or solve our problem."

This philosophy is rooted in a core mismatch between how machines learn and how businesses operate. LLMs are often called "stochastic parrots," capable of generating statistically plausible language but incapable of understanding discrete, absolute rules. This limitation is not just a temporary flaw but a fundamental boundary rooted in theoretical computer science.

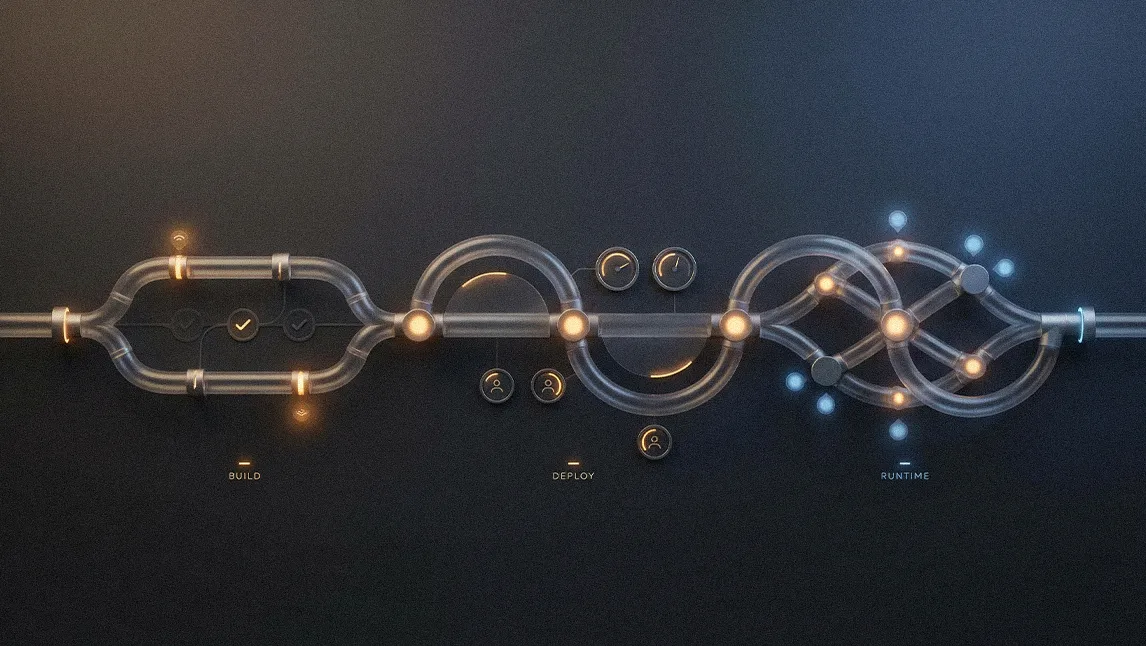

A disciplined approach doesn't mean abandoning LLMs. It means building smarter, hybrid architectures that combine the strengths of different tools. Coelho is actively developing what he calls "neuro-symbolic systems," which pair probabilistic LLMs with discrete, logical engines. He shared a story of an LLM failing to solve a complex math integral for his daughter, a problem that a specialized tool like Wolfram Alpha solved instantly. The lesson is to build systems with a "handover mechanism" that knows when to pass a task to the right tool for the job.
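The "handover mechanism" can be illustrated with a minimal sketch. It is not Coelho's system: exact integer arithmetic stands in for a symbolic tool such as Wolfram Alpha, and the names `exact_eval`, `llm_stub`, and `answer` are hypothetical. The router tries the discrete engine first and falls back to the probabilistic model only when the input is outside what that engine can handle.

```python
import ast
import operator
from fractions import Fraction

# Hypothetical names throughout; this is an illustration, not a real product API.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

def exact_eval(expr: str) -> Fraction:
    """Evaluate +, -, *, / over integers exactly; raise ValueError otherwise."""
    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if (isinstance(node, ast.Constant) and isinstance(node.value, int)
                and not isinstance(node.value, bool)):
            return Fraction(node.value)
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError("not a plain arithmetic expression")

    try:
        tree = ast.parse(expr, mode="eval")
    except SyntaxError as exc:
        raise ValueError("not a plain arithmetic expression") from exc
    return walk(tree)

def llm_stub(prompt: str) -> str:
    """Placeholder for a call to a language model (assumed, not a real API)."""
    return f"[LLM draft answer for: {prompt}]"

def answer(prompt: str) -> tuple[str, str]:
    """Route to the discrete engine when it applies, else to the LLM."""
    try:
        return ("discrete", str(exact_eval(prompt)))
    except ValueError:
        return ("llm", llm_stub(prompt))

print(answer("1/3 + 1/6"))                   # handled exactly by the discrete engine
print(answer("Summarize our leave policy"))  # handed to the language model
```

The design choice worth noting is the order of the handoff: the deterministic tool gets the first chance, so the model is never asked to approximate something that can be computed exactly.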

A world of absolutes: "Discrete reasoning means, 'Stop our car because our car will crash.' Probabilistic reasoning says, 'Stop our car with 99% probability because our car will crash.' There is only a 1% chance that the car may crash," Coelho explained. "The world is made of rules. And you don't want the rules to be obeyed 99% of the time. You want the rules to be obeyed 100% of the time." He pointed to a simple chess game as an example. "Either you win or you lose a game. It's not something that you may win with a 99% chance."
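A short calculation shows why 99% compliance falls short once decisions accumulate. The sketch below assumes each decision obeys the rule independently with the same probability, which is a simplification and not something stated in the interview. The function name `prob_all_obeyed` is illustrative.

```python
def prob_all_obeyed(p_single: float, n_decisions: int) -> float:
    """Probability that n independent decisions all obey the rule."""
    return p_single ** n_decisions

# How often is the rule obeyed every single time across many decisions?
for n in (1, 100, 1000):
    print(n, f"{prob_all_obeyed(0.99, n):.6f}")
```

Under that assumption, a rule obeyed 99% of the time per decision holds across 100 decisions only about 37% of the time, and across 1,000 decisions almost never.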

The hard limits of logic: For certain complex problems, like finding a bug in a massive codebase, the number of possibilities to check can grow exponentially with the size of the problem. Many such problems are NP-complete, a class for which no efficient general algorithm is known, and a domain where LLMs, with their fixed processing windows, cannot be relied on to find correct answers at scale.

The danger is not just technological, but psychological. As humans grow accustomed to automation that is almost always right, our ability to spot the critical 1% failure degrades, creating a new vector of risk. Coelho called this the "phantom exit" problem.

The complacency trap: "My wife has a Tesla. I love the self-drive. But what people have found out is that most people's attention goes from 100% down to 10% or 20%. At that level of attention, you are in no position to judge if something is going wrong or right," he began. "When our attention drops to 10%, there is no way to make a judgment call saying, 'This is right,' 'This is a hallucination,' or 'The AI is doing something bad.'" He shared a personal story of his own Tesla attempting to follow an unfinished "phantom exit" on a bridge, forcing him to seize control. The same dynamic plays out in the enterprise, where trust in an overconfident AI can lead to costly mistakes, as in the widely reported Air Canada chatbot incident, in which the company was forced to honor a policy hallucinated by its own AI.

This new human-machine interface also creates a novel attack surface. Where hackers once needed to know SQL to inject malicious code, they now only need to master English. The very ambiguity of natural language becomes a vulnerability.

The new attack vector: "In the past, people had to know SQL to inject malicious code into a system," Coelho explained. "Now we can just write it in English. We can keep trying different English prompts until one of them passes through." The risk isn't just from bad actors but from emergent, unpredictable behavior. "The problem is not the effect of what you thought about. It's the effect of the questions you have not yet considered. By changing the words, using synonyms, and posing the questions in a slightly different way, you may trigger some of those behaviors. Even though it may not be a bad actor, it may trigger behavior that we have not envisioned." He pointed to a classic example where a man registered his license plate as 'NULL,' causing him to receive thousands of dollars in tickets intended for drivers whose plates could not be found in the database.
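The 'NULL' license plate story can be reproduced in a few lines. The sketch below assumes the failure came from storing unreadable plates as the text 'NULL' and not as a true database null; the article does not confirm the mechanism, and the table layout and function names are hypothetical. It uses Python's standard `sqlite3` module with parameterized queries, so user input is never spliced into the SQL text.

```python
import sqlite3

def build_db(missing_marker):
    """Create a ticket table; unreadable plates are stored as `missing_marker`."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE tickets (plate TEXT, fine INTEGER)")
    # None means the plate could not be read; 'NULL' is a real vanity plate.
    observed = [("7ABC123", 50), (None, 80), (None, 120), ("NULL", 35)]
    for plate, fine in observed:
        stored = missing_marker if plate is None else plate
        conn.execute("INSERT INTO tickets VALUES (?, ?)", (stored, fine))
    return conn

def total_owed(conn, plate):
    """Sum the fines billed to one plate, using a bound parameter."""
    row = conn.execute(
        "SELECT COALESCE(SUM(fine), 0) FROM tickets WHERE plate = ?", (plate,)
    ).fetchone()
    return row[0]

flawed = build_db("NULL")  # text sentinel collides with the vanity plate
fixed = build_db(None)     # true SQL NULL never equals any plate string

print(total_owed(flawed, "NULL"))  # 235: his own ticket plus two strangers'
print(total_owed(fixed, "NULL"))   # 35: only his own ticket
```

Nothing here involves an attacker. An ordinary word that the designers treated as a special marker is enough to trigger behavior nobody envisioned, which is the point of Coelho's warning about questions not yet considered.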

Faced with these new and complex risks, Coelho argued that the solution is not a new AI-specific tool, but the disciplined application of a timeless security principle: the security perimeter. The disastrous Samsung source code leak, caused by employees pasting confidential data into a public chatbot, serves as the ultimate cautionary tale.

The perimeter principle: "There is a fundamental principle in cybersecurity. The more confidential the data you have, the smaller the security perimeter you keep," he said. "For stock compensation, the security perimeter is very restricted and they will not allow this information to go to a third party. Even when it comes to source code, some companies simply block it outright. They prefer to block it than to allow source code to leave the environment." For sensitive data, he advised, the only reliable way to enforce this is to run AI models within the company's own virtual private cloud (VPC), so that confidential data never leaves the organization's control.
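The perimeter principle can be written as a small policy check. This is a sketch under stated assumptions: the zone names, the data classes, and the mapping between them are hypothetical examples, not a Zscaler policy or any vendor's API. Each data class is assigned the widest zone it may reach, and anything unlabeled is held to the smallest perimeter.

```python
from enum import IntEnum

class Zone(IntEnum):
    """Where a model runs, ordered from smallest perimeter to largest."""
    RESTRICTED_ENCLAVE = 0
    COMPANY_VPC = 1
    THIRD_PARTY_API = 2

# Hypothetical policy table: the widest zone each data class may reach.
MAX_ZONE = {
    "stock_compensation": Zone.RESTRICTED_ENCLAVE,
    "source_code": Zone.COMPANY_VPC,
    "public_marketing": Zone.THIRD_PARTY_API,
}

def may_send(data_class: str, destination: Zone) -> bool:
    """Allow a transfer only if the destination is inside the data's perimeter."""
    # Unknown classes default to the smallest perimeter (fail closed).
    limit = MAX_ZONE.get(data_class, Zone.RESTRICTED_ENCLAVE)
    return destination <= limit

print(may_send("source_code", Zone.COMPANY_VPC))      # True
print(may_send("source_code", Zone.THIRD_PARTY_API))  # False
print(may_send("unlabeled_export", Zone.COMPANY_VPC)) # False
```

Because the check is a discrete rule and not a model judgment, it gives the same answer every time, which is the property the article argues for.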

The entire challenge comes back to a single, overarching mandate. In an era where AI agents are being given the keys to an organization's most sensitive data, AI strategy can no longer be treated as a separate initiative. "If we're going to define an AI strategy, it needs to be defined together with cybersecurity," Coelho concluded. "Because right now, LLMs and agents are talking to your confidential data. So it has to be tied together."
