Advanced agentic AI protocols are still largely underdeveloped, posing challenges for digital architecture and security.

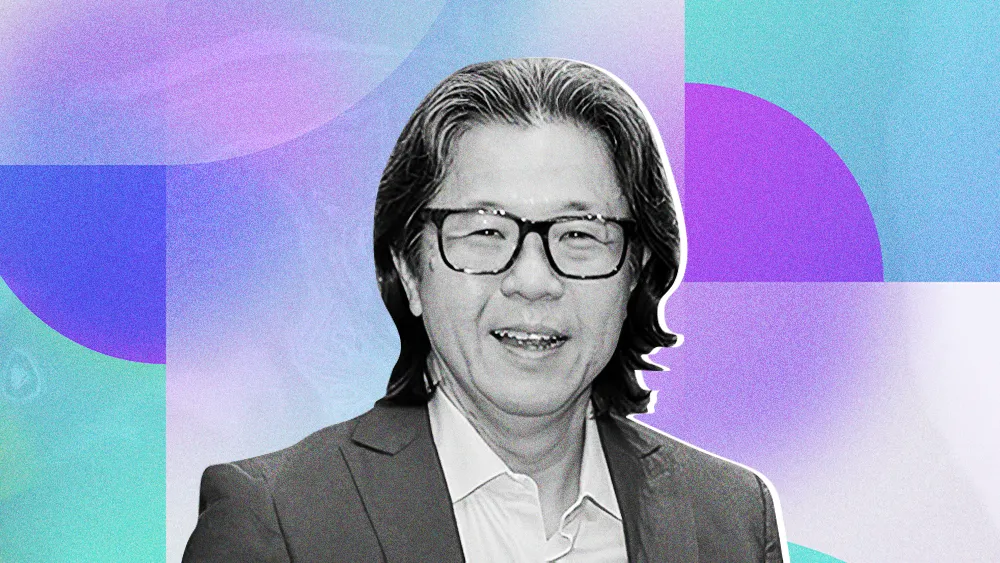

Cisco's Christopher Chew joined us for an interview to discuss the current state of AI protocols, and the need for a socio-technical ecosystem.

Chew highlighted the "Holy Triad" of technology goals: faster, cheaper, or more efficient, noting the illusion of cost savings in AI.

Chew advocated for a multi-lens human-led approach to AI, considering cybersecurity, regulation, and end-user perspectives.

Behind the curtain of AI promise and proliferation resides a surprising reality. As advanced automation and generative AI solutions drive consumer-friendly utility and enterprise-grade ROI faster than any previous technology, the underlying infrastructure and protocols supporting many of these systems are still in a nascent stage. The protocols powering agentic AI extend beyond the capabilities of standard APIs. They embody human-inspired characteristics that allow for more flexibility in workflows.

Major foundational players are rushing to establish the dominant protocols that will govern the coming wave of intelligent agents. But this hyper-technical arms race is just one theater in a much larger contest for AI supremacy. The conditions are staging a complex battleground for the future of digital architecture, security, and trust.

We spoke with Christopher Chew, a senior technical leader at Cisco and a key member of the IAPP’s Asia and Privacy Engineering advisory boards. Chew recently wrote an essay exploring some of the differences between the emerging Agent Network Protocol (ANP) and Model Context Protocol (MCP). Drawing on over two decades of experience at the intersection of technology and digital trust, he argues that the industry’s current approach is fundamentally flawed.

A 3D puzzle in a 2D world: "If we're looking at new AI protocols as the 'best thing since sliced bread,' I think we're missing the point," Chew warned. "We are trying to solve a 3D puzzle in a two-dimensional way, and that's just wrong." Protocols, according to Chew, are a means to an end. The systems they support and the culture of measured adoption around them are the greater indicators of success or failure.

For Chew, the real story isn't about any single protocol, but the entire socio-technical ecosystem it inhabits. To understand this ecosystem, you have to go back to first principles. The current moment is just the latest chapter in a story that began with the personal computer, which "disaggregated" compute power from centralized mainframes and unleashed a relentless cycle of innovation.

The Holy Triad: "With new technology, there's a holy triad of goals: you want to make things faster, cheaper, or more efficient," Chew explained. "The rule is simple: you can choose any two, but you can never have all three." In the case of AI, he noted, the promise of "cheaper" is often an illusion. This drive for disruption has led to a fundamental conflict. Businesses are desperate to monetize our personal data, but they are building on broken, under-governed foundations.

An insecure foundation by design: "The Internet was not built to be secure," Chew stated. "If you talk to Vint Cerf, he'll say that the objective was to connect everybody. It's not built with security in mind, certainly not with privacy in mind."

This inherited flaw is the central problem today's leaders must confront, which brings the focus back to the one variable that is both the problem and the solution: us.

The human duality: "Humans are usually the weakest link when it comes to cybersecurity," Chew said. "But when it comes to forward-looking thought leadership, we thrive." This duality—our capacity for error and our unparalleled genius for innovation—is the engine driving both the chaos and the opportunity. Navigating it requires a new way of thinking, a framework Chew uses to separate today's tactical fires from tomorrow's existential crises.

Chew urged a mindset shift: don't just solve today's problem, but anticipate tomorrow's. He formalizes this in a "Horizons" framework that he applies in his enterprise solutions engineering roles, separating immediate challenges (Horizon 1) from the long-term, strategic work of building digital resilience.

The Horizon 3 burden: "A lot of what we do that keeps us awake at night is Horizon 3—what's really in the future," Chew reflected. "And how can we shape that? How can we transform that in a meaningful way, in an ethical way as it comes to AI?" For Chew, it's all about security at scale, and keeping humans in the loop until autonomous agentic AI systems come to fruition (if ever).

But many organizations remain stuck in Horizon 1 when it comes to AI, unable to look past the immediate goal. Chew identified several reasons for this disconnect, starting with a tendency to chase trends.

Maslow's AI hammer: "If you carry a hammer in your hand, very soon every problem will start looking like a nail," he said. "And that's the feeling I'm getting with agentic AI." This is compounded by misaligned incentives, a lack of skilled personnel, and a persistent communication gap between technical experts and the C-Suite.

A multi-lens journey: Chew knows the challenge firsthand, often feeling like the "voice of reason" while advocating for caution-infused innovation. "I'm a cybersecurity guy. I look at it from a cybersecurity lens, but I've taken a step back in recent years and said, 'Have I looked at it from a regulatory perspective? Have I looked at it from a governance perspective? What about from an end-user perspective?'"

Chew said the stakes of this long-term, multi-lens thinking are immense. True digital resilience means confronting the magnified security risks of agentic AI, the staggering energy costs that may require "mini nuclear reactors," and the geopolitical minefield of data sovereignty. Because contexts differ so vastly, from state surveillance to B2B security, he argued that the future must be a hybrid of approaches in which "use case is key." The path forward is not a simple technological fix, but a human one that demands a new kind of leadership.

It circles back to what Chew calls the "frailty of the human condition." He reflected on the toughest job of all: managing people, including "managing up" to leadership. Real progress, he suggested, comes not from demanding perfection, but from having the wisdom to let people safely learn by doing over time.

*The views expressed by Christopher Chew in this article are his own, and do not reflect those of his employer.
